So, setting CPU and memory limits for the containers in a Pod ensures that each container receives only its fair share of the node's resources and does not degrade the performance of other Pods running on the same node.

Kubernetes uses the following YAML requests and limits structure to control a container's CPU and memory resources:

resources:
  requests:
    cpu: 100m
    memory: 50Mi
  limits:
    cpu: 150m
    memory: 100Mi

requests:

- This is where you specify how much CPU and memory a container needs. Kubernetes schedules the Pod only on a node that can provide the requested resources.

limits:

- This is where you specify the maximum CPU and memory a single container is allowed to use. A running container cannot use more than the specified limits.

- limits can never be lower than requests. If you try this, Kubernetes returns an error and won’t let you run the container.

- CPU is a “compressible” resource. When a container reaches its CPU limit, it is not terminated; it is throttled instead, which degrades its performance.

- Memory is a “non-compressible” resource. When a container exceeds its memory limit, it is terminated (OOMKilled, i.e. Out Of Memory killed).
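To see the "limits can never be lower than requests" rule in practice, the sketch below writes a deliberately invalid manifest in which the CPU limit is lower than the CPU request. The Pod name `invalid-limits-pod` and the file name are made up for this illustration; the exact error text depends on your cluster version.

```shell
# Write a deliberately invalid manifest: CPU limit (100m) is lower than CPU request (200m).
# The Pod name and file name are hypothetical, chosen only for this sketch.
cat > invalid-limits-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: invalid-limits-pod
spec:
  containers:
    - name: nginx
      image: nginx:alpine
      resources:
        requests:
          cpu: 200m
          memory: 50Mi
        limits:
          cpu: 100m
          memory: 100Mi
EOF

# Applying it against a cluster should be rejected by API validation:
#   kubectl apply -f invalid-limits-pod.yaml
```

Swapping the two CPU values (request 100m, limit 200m) should make the same manifest valid.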

Limits and requests for CPU resources are measured in cpu units. One cpu in Kubernetes is equivalent to

1 AWS vCPU (or) 1 GCP Core (or) 1 Azure vCore (or) 1 Hyperthread on a bare-metal processor.

CPU resources can be specified either as fractional cores or in millicores, i.e. 1 core = 1000 millicores.

Ex: 0.2 is equivalent to 200m
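The conversion between fractional cores and millicores can be sketched with a small shell helper. The function name `cpu_to_millicores` is made up for this example and is not part of kubectl; it only covers the two notations mentioned above.

```shell
# Convert a CPU quantity ("0.2", "1" or "200m") to millicores.
# Hypothetical helper for illustration; not a kubectl feature.
cpu_to_millicores() {
  case "$1" in
    # Already in millicores: strip the trailing "m".
    *m) printf '%s\n' "${1%m}" ;;
    # Fractional or whole cores: multiply by 1000 and round to the nearest integer.
    *)  awk -v c="$1" 'BEGIN { printf "%d\n", c * 1000 + 0.5 }' ;;
  esac
}

cpu_to_millicores 0.2    # prints 200
cpu_to_millicores 200m   # prints 200
cpu_to_millicores 1      # prints 1000
```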

Kubernetes Memory Resource Units

resources:
  requests:
    cpu: 500m
    memory: 200Mi
  limits:
    cpu: 1000m
    memory: 400Mi
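Memory quantities such as 200Mi use binary suffixes (Ki, Mi, Gi are powers of 1024), while k, M and G are powers of 1000. As a rough sketch of the difference, the helper below converts a quantity to bytes. The function name `memory_to_bytes` is an assumption made for this example, and it handles only whole numbers with the suffixes listed.

```shell
# Convert a memory quantity ("200Mi", "200M", "1Gi", "1024") to bytes.
# Hypothetical helper for illustration; supports integer values only.
memory_to_bytes() {
  value="${1%%[A-Za-z]*}"   # numeric part, e.g. "200"
  suffix="${1#"$value"}"    # unit part, e.g. "Mi"
  case "$suffix" in
    "") factor=1 ;;
    k)  factor=1000 ;;
    M)  factor=$((1000 * 1000)) ;;
    G)  factor=$((1000 * 1000 * 1000)) ;;
    Ki) factor=1024 ;;
    Mi) factor=$((1024 * 1024)) ;;
    Gi) factor=$((1024 * 1024 * 1024)) ;;
    *)  echo "unsupported suffix: $suffix" >&2; return 1 ;;
  esac
  echo $((value * factor))
}

memory_to_bytes 200Mi   # prints 209715200
memory_to_bytes 200M    # prints 200000000
```

So 200Mi is about 5% larger than 200M, which is worth keeping in mind when sizing limits.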

Create the following YAML content and save it as "pod-cpu-memory-limit.yaml".

apiVersion: v1
kind: Pod
metadata:
  name: pod-cpu-memory-limit
spec:
  containers:
    - name: nginx
      image: nginx:alpine
      ports:
        - containerPort: 80
          protocol: TCP
      resources:
        requests:
          cpu: 100m
          memory: 50Mi
        limits:
          cpu: 150m
          memory: 100Mi
    - name: alpine
      image: alpine
      # print the current date every 10 seconds to keep the container running
      command: ["sh", "-c", "while true; do date; sleep 10; done"]
      resources:
        requests:
          cpu: 50m
          memory: 30Mi
        limits:
          cpu: 60m
          memory: 50Mi

- The first container (nginx) requests 100m (0.1) CPU and 50Mi of memory, with limits of 150m CPU and 100Mi of memory.

- The second container (alpine) requests 50m CPU and 30Mi of memory, with limits of 60m CPU and 50Mi of memory.

So, the Pod has a total request of 150m CPU and 80Mi of memory, and a total limit of 210m CPU and 150Mi of memory.
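The Pod-level totals are simply the per-container values added together. A quick shell sketch of that arithmetic, with variable names chosen only for this example:

```shell
# Per-container values from pod-cpu-memory-limit.yaml (CPU in millicores, memory in Mi).
nginx_req_cpu=100;  nginx_req_mem=50;  nginx_lim_cpu=150;  nginx_lim_mem=100
alpine_req_cpu=50;  alpine_req_mem=30; alpine_lim_cpu=60;  alpine_lim_mem=50

# Pod totals are the sums across both containers.
pod_req_cpu=$((nginx_req_cpu + alpine_req_cpu))
pod_req_mem=$((nginx_req_mem + alpine_req_mem))
pod_lim_cpu=$((nginx_lim_cpu + alpine_lim_cpu))
pod_lim_mem=$((nginx_lim_mem + alpine_lim_mem))

echo "requests: ${pod_req_cpu}m CPU, ${pod_req_mem}Mi memory"   # requests: 150m CPU, 80Mi memory
echo "limits:   ${pod_lim_cpu}m CPU, ${pod_lim_mem}Mi memory"   # limits:   210m CPU, 150Mi memory
```

The scheduler places the Pod based on the summed requests, so a node needs at least 150m CPU and 80Mi of memory unreserved to accept it.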

Run the following command to get each node's capacity (i.e. the total CPU and memory of the node) and its allocatable resources (i.e. the resources the scheduler can allocate to Pods).

// check all worker nodes capacity
$ kubectl describe nodes
Capacity:
  cpu:     4
  memory:  7118488Ki
Allocatable:
  cpu:     4
  memory:  7016088Ki
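The gap between Capacity and Allocatable is what the node holds back from Pods (typically system reservations and eviction thresholds, depending on how the kubelet is configured). Using the sample figures from the output above, the reserved memory can be worked out like this; the variable names are only for this sketch:

```shell
# Memory figures (in Ki) copied from the sample "kubectl describe nodes" output.
capacity_ki=7118488
allocatable_ki=7016088

# Memory the node keeps back and does not offer to the scheduler.
reserved_ki=$((capacity_ki - allocatable_ki))
reserved_mi=$((reserved_ki / 1024))

echo "reserved: ${reserved_ki}Ki (${reserved_mi}Mi)"   # reserved: 102400Ki (100Mi)
```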

// create pod
$ kubectl apply -f pod-cpu-memory-limit.yaml
pod/pod-cpu-memory-limit created

// display pods
$ kubectl get po
NAME                   READY   STATUS    RESTARTS
pod-cpu-memory-limit   2/2     Running   0

// view CPU and Memory limits for all containers in the Pod
$ kubectl describe pod/pod-cpu-memory-limit
Name:       pod-cpu-memory-limit
Namespace:  default
Containers:
  nginx:
    Image:  nginx:alpine
    Limits:
      cpu:     150m
      memory:  100Mi
    Requests:
      cpu:     100m
      memory:  50Mi
  alpine:
    Image:  alpine
    Limits:
      cpu:     60m
      memory:  50Mi
    Requests:
      cpu:     50m
      memory:  30Mi

// A Pod stays in the Pending state when its requests are bigger than the node capacity
// Create Pod using imperative style
$ kubectl run requests-bigger-pod --image=busybox --restart Never \
  --requests='cpu=8000m,memory=200Mi'

// display pods
$ kubectl get po
NAME                  READY   STATUS    RESTARTS
requests-bigger-pod   0/1     Pending   0

// Pod is in Pending status due to insufficient CPU. So, check Pod details
$ kubectl describe pod/requests-bigger-pod
Name:       requests-bigger-pod
Namespace:  default
Events:
  Type     Reason            Age   From               Message
  ----     ------            ----  ----               -------
  Warning  FailedScheduling  28s   default-scheduler  0/1 nodes are available: 1 Insufficient cpu.
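Newer kubectl releases may no longer accept the --requests and --limits flags on kubectl run. If that is the case in your environment, the same Pending scenario can be reproduced declaratively. The sketch below writes a manifest intended to match the imperative command above; the file name is an assumption, and the container name simply mirrors the Pod name.

```shell
# Declarative equivalent of the "requests-bigger-pod" example.
# The file name is chosen for this sketch only.
cat > requests-bigger-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: requests-bigger-pod
spec:
  restartPolicy: Never
  containers:
    - name: requests-bigger-pod
      image: busybox
      resources:
        requests:
          cpu: 8000m      # more CPU than the 4-cpu sample node can offer
          memory: 200Mi
EOF

# Then create it against your cluster:
#   kubectl apply -f requests-bigger-pod.yaml
```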

// A Pod is created successfully even when its limits are bigger than the node capacity,
// because the scheduler only considers requests when placing a Pod
// Create Pod using imperative style
$ kubectl run limits-bigger-pod --image=busybox --restart Never \
  --requests='cpu=100m,memory=50Mi' \
  --limits='cpu=8000m,memory=200Mi'

// If you specify limits but do not specify requests, Kubernetes sets the requests equal to the limits
$ kubectl run no-requests-pod --image=busybox --restart Never \
  --limits='cpu=100m,memory=50Mi'

// view CPU and Memory limits
$ kubectl describe pod/no-requests-pod
Name:       no-requests-pod
Namespace:  default
Containers:
  no-requests-pod:
    Image:  busybox
    Limits:
      cpu:     100m
      memory:  50Mi
    Requests:
      cpu:     100m
      memory:  50Mi

Kubernetes for Developers Journey.

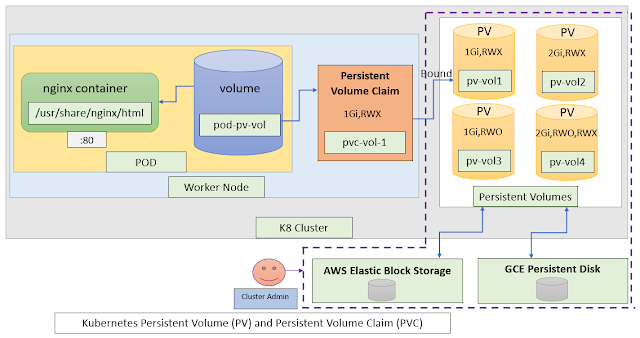

- Kubernetes for Developers #25: PersistentVolume and PersistentVolumeClaim in-detail

- Kubernetes for Developers #24: Kubernetes Volume hostPath in-detail

- Kubernetes for Developers #23: Kubernetes Volume emptyDir in-detail

- Kubernetes for Developers #22: Access to Multiple Clusters or Namespaces using kubectl and kubeconfig

- Kubernetes for Developers #21: Kubernetes Namespace in-detail

- Kubernetes for Developers #20: Create Automated Tasks using Jobs and CronJobs

- Kubernetes for Developers #19: Manage app credentials using Kubernetes Secrets

- Kubernetes for Developers #18: Manage app settings using Kubernetes ConfigMap

- Kubernetes for Developers #17: Expose service using Kubernetes Ingress

- Kubernetes for Developers #16: Kubernetes Service Types - ClusterIP, NodePort, LoadBalancer and ExternalName

- Kubernetes for Developers #15: Kubernetes Service YAML manifest in-detail

- Kubernetes for Developers #14: Kubernetes Deployment YAML manifest in-detail

- Kubernetes for Developers #13: Effective way of using K8 Readiness Probe

- Kubernetes for Developers #12: Effective way of using K8 Liveness Probe

- Kubernetes for Developers #11: Pod Organization using Labels

- Kubernetes for Developers #10: Kubernetes Pod YAML manifest in-detail

- Kubernetes for Developers #9: Kubernetes Pod Lifecycle

- Kubernetes for Developers #8: Kubernetes Object Name, Labels, Selectors and Namespace

- Kubernetes for Developers #7: Imperative vs. Declarative Kubernetes Objects

- Kubernetes for Developers #6: Kubernetes Objects

- Kubernetes for Developers #5: Kubernetes Web UI Dashboard

- Kubernetes for Developers #4: Enable kubectl bash autocompletion

- Kubernetes for Developers #3: kubectl CLI

- Kubernetes for Developers #2: Kubernetes for Local Development

- Kubernetes for Developers #1: Kubernetes Architecture and Features

Happy Coding :)