This problem can be solved with external storage volumes such as awsElasticBlockStore, azureDisk, GCE PD, and nfs. However, the developer must then know the details of the network storage infrastructure in order to reference it in the Pod definition.

For example, to use an AWS EBS volume in a Pod, the developer must know the EBS volume ID and the filesystem type. If the storage details change, the developer must update every Pod definition that references them.

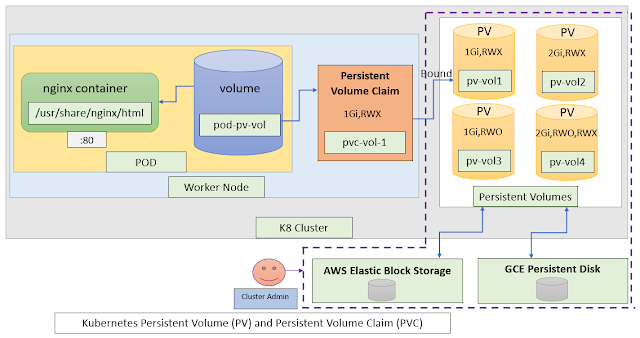

Kubernetes solves this problem with PersistentVolumes and PersistentVolumeClaims, which decouple the underlying storage details from the application Pod definitions. Developers don’t have to know which storage infrastructure is being used underneath; that is the cluster administrator’s responsibility.

As shown in the diagram:

- PersistentVolumes (PVs) are cluster-level resources, like worker nodes; they do not belong to any namespace.

- PersistentVolumeClaims (PVCs) are namespaced: a PVC is created in a specific namespace and can be used only by Pods in that same namespace.

- The cluster administrator sets up the cloud storage infrastructure, e.g., AWS Elastic Block Store (EBS) or GCE Persistent Disk (PD), as needed.

- The cluster administrator creates Kubernetes PersistentVolumes (PVs) of different sizes and access modes that reference the AWS EBS/GCE PD volumes, according to application requirements.

- Whenever a Pod requires persistent storage, the developer creates a PersistentVolumeClaim (PVC) that specifies the minimum size and the access mode. Kubernetes finds an adequate PersistentVolume with at least the requested size and a matching access mode, and binds the volume (PV) to the claim (PVC).

- The Pod references the PersistentVolumeClaim (PVC) as one of its volumes.

- Once a PersistentVolume is bound to a PVC, no other claim can use it until it is released (i.e., the PVC must be deleted before the PV can be reused).

- Developers don’t have to know the underlying storage details. They only need to create a PersistentVolumeClaim (PVC) whenever a Pod requires persistent storage.
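The binding step described above can be sketched in Python. This is a simplified model, not the actual Kubernetes controller code: it assumes sizes are plain integers in MiB and ignores label selectors, volume modes, and node affinity. Among the unbound PVs whose storage class and access modes match, it picks the smallest one that is at least as large as the request, which is roughly how the PV controller chooses a match. The class names mirror the Kubernetes kinds; the volume "pv-large" and the claims "pvc-vol-2" and "pvc-vol-3" are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass
class PersistentVolume:
    name: str
    capacity_mi: int                # capacity in MiB, e.g. 1024 for "1Gi"
    access_modes: FrozenSet[str]    # e.g. frozenset({"ReadWriteOnce"})
    storage_class: str = ""         # "" means "no storage class"
    bound_claim: Optional[str] = None


@dataclass
class PersistentVolumeClaim:
    name: str
    request_mi: int                 # requested size in MiB, e.g. 500 for "500Mi"
    access_modes: FrozenSet[str]
    storage_class: str = ""


def find_best_match(claim: PersistentVolumeClaim,
                    volumes: List[PersistentVolume]) -> Optional[PersistentVolume]:
    """Return the smallest unbound PV that satisfies the claim, or None."""
    candidates = [
        pv for pv in volumes
        if pv.bound_claim is None                       # only "Available" PVs
        and pv.storage_class == claim.storage_class     # classes must match
        and claim.access_modes <= pv.access_modes       # PV offers every requested mode
        and pv.capacity_mi >= claim.request_mi          # at least the requested size
    ]
    return min(candidates, key=lambda pv: pv.capacity_mi, default=None)


def bind(claim: PersistentVolumeClaim,
         volumes: List[PersistentVolume]) -> Optional[PersistentVolume]:
    """Bind the claim to its best match; the PV is then exclusive to this claim."""
    pv = find_best_match(claim, volumes)
    if pv is not None:
        pv.bound_claim = claim.name
    return pv


rwo = frozenset({"ReadWriteOnce"})
volumes = [
    PersistentVolume("pv-large", 10 * 1024, rwo),   # hypothetical 10Gi volume
    PersistentVolume("pv-vol1", 1024, rwo),         # the 1Gi volume from this post
]
first = bind(PersistentVolumeClaim("pvc-vol-1", 500, rwo), volumes)
second = bind(PersistentVolumeClaim("pvc-vol-2", 500, rwo), volumes)
third = bind(PersistentVolumeClaim("pvc-vol-3", 500, rwo), volumes)
print(first.name, second.name, third)  # pv-vol1 pv-large None
```

Picking the smallest adequate volume avoids spending a large PV on a small claim. Once every matching PV is bound, further claims stay unbound until a suitable PV appears.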

PersistentVolumes (PVs) support the following access modes:

- ReadWriteOnce (RWO): the volume can be mounted for reading and writing by only a single worker node at a time (Pods running on that node can still share it).

- ReadOnlyMany (ROX): multiple worker nodes can mount the volume read-only at the same time.

- ReadWriteMany (RWX): multiple worker nodes can mount the volume for reading and writing at the same time.

The reclaim policy (persistentVolumeReclaimPolicy) determines what happens to a PV after its PVC is deleted:

- Delete: the PV object and its underlying storage asset (e.g., the AWS EBS volume) are deleted as soon as the PVC is deleted.

- Retain: the PV and its contents are kept after the PVC is deleted. The PV moves to the "Released" state and cannot be claimed again until the cluster administrator reclaims the volume manually.
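To make the two reclaim policies concrete, here is a minimal Python model of a PV's lifecycle, assuming only Retain and Delete are in play. The phase strings follow what kubectl get pv reports ("Available", "Bound", "Released"); the class itself and its exists flag are illustrative only and are not a Kubernetes API.

```python
class PersistentVolume:
    """Simplified PV lifecycle: Available -> Bound -> Released (Retain) or removed (Delete)."""

    def __init__(self, name: str, reclaim_policy: str) -> None:
        if reclaim_policy not in ("Retain", "Delete"):
            raise ValueError(f"unsupported reclaim policy: {reclaim_policy}")
        self.name = name
        self.reclaim_policy = reclaim_policy
        self.phase = "Available"
        self.claim_ref = None   # name of the PVC this PV belongs to
        self.exists = True      # False once the PV object has been deleted

    def bind(self, claim_name: str) -> None:
        # Only an Available PV can be bound; a Released PV still carries its old claim_ref.
        if not self.exists:
            raise RuntimeError(f"{self.name} has been deleted")
        if self.phase != "Available":
            raise RuntimeError(f"{self.name} is {self.phase}, not Available")
        self.claim_ref = claim_name
        self.phase = "Bound"

    def claim_deleted(self) -> None:
        """Apply the reclaim policy after the bound PVC is deleted."""
        if self.phase != "Bound":
            raise RuntimeError(f"{self.name} is not Bound")
        if self.reclaim_policy == "Retain":
            # Data is kept and claim_ref stays set; an admin must reclaim the PV manually.
            self.phase = "Released"
        else:
            # Delete: the PV object and its backing storage (e.g. the EBS volume) go away.
            self.exists = False


retained = PersistentVolume("pv-vol1", "Retain")
retained.bind("pvc-vol-1")
retained.claim_deleted()
print(retained.phase)  # Released

try:
    retained.bind("another-claim")
except RuntimeError as err:
    print(err)  # pv-vol1 is Released, not Available
```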

```shell
# Ex: creating a 10 GiB AWS EBS volume from the CLI
$ aws ec2 create-volume \
    --availability-zone us-east-1a \
    --size 10 --volume-type gp2
```

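The cluster administrator then creates the following PV, which references the EBS volume by its ID (ebs-data-id stands for the "VolumeId" value returned by create-volume):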

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-vol1
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ""
  awsElasticBlockStore:
    volumeID: ebs-data-id
    fsType: ext4
```

For local testing, let’s use a hostPath PersistentVolume instead. On the worker node, create a directory called “/mydata” and an “index.html” file inside it.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-vol1
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ""
  hostPath:
    path: "/mydata"
```

In the YAML above, the volume is backed by the “/mydata” directory on the host, with a size of 1Gi and an access mode of “ReadWriteOnce” (RWO).

Save the above YAML as "pv-vol1.yaml" and run the following kubectl commands:

```shell
# create the PersistentVolume (PV)
$ kubectl apply -f pv-vol1.yaml
persistentvolume/pv-vol1 created

# display PVs
$ kubectl get pv
NAME      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS
pv-vol1   1Gi        RWO            Retain           Available
```

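Here, the status is "Available", which means the PV is not yet bound to a PersistentVolumeClaim (PVC).

Next, define a PersistentVolumeClaim that requests 500Mi with the ReadWriteOnce access mode and save it as "pvc-vol-1.yaml":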

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-vol-1
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 500Mi
  storageClassName: ""
```

```shell
# create the PVC
$ kubectl apply -f pvc-vol-1.yaml
persistentvolumeclaim/pvc-vol-1 created

# display PVCs
$ kubectl get pvc
NAME        STATUS   VOLUME    CAPACITY   ACCESS MODES
pvc-vol-1   Bound    pv-vol1   1Gi        RWO
```

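Here, the PersistentVolumeClaim is bound to the PersistentVolume pv-vol1. Although the claim requested only 500Mi, its capacity is reported as 1Gi because the entire PV is bound to the claim.

The next step is to create a Pod that uses the PersistentVolumeClaim as a volume: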
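The arithmetic behind that match: Kubernetes quantity suffixes such as Mi and Gi are binary (powers of 1024), while k, M, and G are decimal (powers of 1000). The to_bytes helper below is a hypothetical sketch that only handles whole numbers with those common suffixes; real Kubernetes quantities also allow decimals, exponents, and further suffixes.

```python
import re

# Multipliers for the common Kubernetes quantity suffixes:
# binary suffixes are powers of 1024, decimal suffixes are powers of 1000.
MULTIPLIERS = {
    "": 1,
    "Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3, "Ti": 1024 ** 4,
    "k": 1000, "M": 1000 ** 2, "G": 1000 ** 3, "T": 1000 ** 4,
}


def to_bytes(quantity: str) -> int:
    """Convert a whole-number quantity such as "500Mi" or "1Gi" to bytes."""
    match = re.fullmatch(r"(\d+)([KMGT]i|[kMGT])?", quantity)
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    number, suffix = match.groups()
    return int(number) * MULTIPLIERS[suffix or ""]


request = to_bytes("500Mi")    # what pvc-vol-1 asks for
capacity = to_bytes("1Gi")     # what pv-vol1 offers
print(request, capacity, request <= capacity)  # 524288000 1073741824 True
```

The difference, 549453824 bytes (exactly 524Mi), stays inside pv-vol1 and cannot be claimed by anyone else while pvc-vol-1 holds the volume.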

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-pv-pvc
spec:
  containers:
    - name: nginx
      image: nginx:alpine
      ports:
        - containerPort: 80
          protocol: TCP
      volumeMounts:
        - name: pod-pv-vol
          mountPath: /usr/share/nginx/html
  volumes:
    - name: pod-pv-vol
      persistentVolumeClaim:
        claimName: pvc-vol-1
```

Save the above YAML as "pod-pv-pvc.yaml" and run the following kubectl commands:

```shell
# create the Pod
$ kubectl apply -f pod-pv-pvc.yaml
pod/pod-pv-pvc created

# display Pods
$ kubectl get po
NAME         READY   STATUS    RESTARTS   AGE
pod-pv-pvc   1/1     Running   0          1m

# syntax:
# kubectl port-forward <pod-name> <local-port>:<container-port>
$ kubectl port-forward pod-pv-pvc 8081:80
Forwarding from 127.0.0.1:8081 -> 80
Forwarding from [::1]:8081 -> 80

# port-forward keeps running in the foreground, so run curl from another terminal
$ curl http://localhost:8081
text message text Tue Nov 16 12:01:10 UTC 2021
```

We have successfully configured a Pod to use a PersistentVolumeClaim as its persistent storage.

Run the following kubectl commands to delete the resources:
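The Pod never names the PV directly. It goes through a chain of references: a volumeMount refers to a Pod volume by name, that volume refers to a PVC by claimName, and the PVC is bound to a PV. The sketch below follows that chain for pod-pv-pvc. The resolve_mounts helper and the dict layout are hypothetical, and the sketch assumes the Pod runs in the "default" namespace, since pod-pv-pvc.yaml does not set one.

```python
from typing import Dict, Tuple

# The Pod from pod-pv-pvc.yaml as a Python dict (only the fields used here).
pod = {
    "metadata": {"name": "pod-pv-pvc", "namespace": "default"},  # namespace assumed
    "spec": {
        "containers": [{
            "name": "nginx",
            "volumeMounts": [{"name": "pod-pv-vol", "mountPath": "/usr/share/nginx/html"}],
        }],
        "volumes": [{"name": "pod-pv-vol",
                     "persistentVolumeClaim": {"claimName": "pvc-vol-1"}}],
    },
}

# Bound PVCs keyed by (namespace, name); the value mirrors the PVC's spec.volumeName.
pvcs: Dict[Tuple[str, str], str] = {("default", "pvc-vol-1"): "pv-vol1"}


def resolve_mounts(pod: dict, pvcs: Dict[Tuple[str, str], str]) -> Dict[str, str]:
    """Map each mountPath to the PV behind it: volumeMount -> volume -> PVC -> PV."""
    namespace = pod["metadata"].get("namespace", "default")
    volumes = {v["name"]: v for v in pod["spec"].get("volumes", [])}
    resolved = {}
    for container in pod["spec"]["containers"]:
        for mount in container.get("volumeMounts", []):
            volume = volumes[mount["name"]]          # mounts refer to volumes by name
            claim = volume.get("persistentVolumeClaim")
            if claim is None:
                continue                             # not a PVC-backed volume
            key = (namespace, claim["claimName"])    # PVCs are looked up in the Pod's namespace
            if key not in pvcs:
                raise LookupError(f"PVC {key[1]!r} not found in namespace {namespace!r}")
            resolved[mount["mountPath"]] = pvcs[key]
    return resolved


print(resolve_mounts(pod, pvcs))  # {'/usr/share/nginx/html': 'pv-vol1'}
```

Because the lookup is keyed by the Pod's namespace, the same Pod in another namespace would not find pvc-vol-1, which matches the rule that PVCs can only be used by Pods in their own namespace.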

```shell
$ kubectl delete pod pod-pv-pvc
$ kubectl delete pvc pvc-vol-1
$ kubectl delete pv pv-vol1
```
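The cleanup order above (Pod, then PVC, then PV) lines up with Kubernetes’ Storage Object in Use Protection: a PVC still used by a Pod, or a PV still bound to a PVC, is not removed immediately and stays in the Terminating state until that dependency goes away. The following Python sketch models this check. The deletion_blockers function is hypothetical; the finalizer names in its comments (kubernetes.io/pvc-protection and kubernetes.io/pv-protection) are the ones Kubernetes uses.

```python
from typing import Dict, List


def deletion_blockers(kind: str, name: str,
                      pod_claims: Dict[str, str],
                      pvc_volumes: Dict[str, str]) -> List[str]:
    """Objects that keep a PVC or PV in Terminating (simplified model).

    pod_claims:  Pod name -> claimName it mounts
    pvc_volumes: PVC name -> PV it is bound to
    """
    if kind == "pvc":
        # kubernetes.io/pvc-protection: kept while any Pod still uses the claim
        return sorted(f"pod/{p}" for p, c in pod_claims.items() if c == name)
    if kind == "pv":
        # kubernetes.io/pv-protection: kept while the PV is bound to a claim
        return sorted(f"pvc/{c}" for c, v in pvc_volumes.items() if v == name)
    return []  # Pods are not protected by these finalizers


pods = {"pod-pv-pvc": "pvc-vol-1"}
claims = {"pvc-vol-1": "pv-vol1"}

# Deleting the PVC first would leave it Terminating until the Pod is gone.
print(deletion_blockers("pvc", "pvc-vol-1", pods, claims))  # ['pod/pod-pv-pvc']

# Following the order above: Pod, then PVC, then PV.
del pods["pod-pv-pvc"]
print(deletion_blockers("pvc", "pvc-vol-1", pods, claims))  # []
del claims["pvc-vol-1"]
print(deletion_blockers("pv", "pv-vol1", pods, claims))     # []
```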

Kubernetes for Developers Journey.

- Kubernetes for Developers #24: Kubernetes Volume hostPath in-detail

- Kubernetes for Developers #23: Kubernetes Volume emptyDir in-detail

- Kubernetes for Developers #22: Access to Multiple Clusters or Namespaces using kubectl and kubeconfig

- Kubernetes for Developers #21: Kubernetes Namespace in-detail

- Kubernetes for Developers #20: Create Automated Tasks using Jobs and CronJobs

- Kubernetes for Developers #19: Manage app credentials using Kubernetes Secrets

- Kubernetes for Developers #18: Manage app settings using Kubernetes ConfigMap

- Kubernetes for Developers #17: Expose service using Kubernetes Ingress

- Kubernetes for Developers #16: Kubernetes Service Types - ClusterIP, NodePort, LoadBalancer and ExternalName

- Kubernetes for Developers #15: Kubernetes Service YAML manifest in-detail

- Kubernetes for Developers #14: Kubernetes Deployment YAML manifest in-detail

- Kubernetes for Developers #13: Effective way of using K8 Readiness Probe

- Kubernetes for Developers #12: Effective way of using K8 Liveness Probe

- Kubernetes for Developers #11: Pod Organization using Labels

- Kubernetes for Developers #10: Kubernetes Pod YAML manifest in-detail

- Kubernetes for Developers #9: Kubernetes Pod Lifecycle

- Kubernetes for Developers #8: Kubernetes Object Name, Labels, Selectors and Namespace

- Kubernetes for Developers #7: Imperative vs. Declarative Kubernetes Objects

- Kubernetes for Developers #6: Kubernetes Objects

- Kubernetes for Developers #5: Kubernetes Web UI Dashboard

- Kubernetes for Developers #4: Enable kubectl bash autocompletion

- Kubernetes for Developers #3: kubectl CLI

- Kubernetes for Developers #2: Kubernetes for Local Development

- Kubernetes for Developers #1: Kubernetes Architecture and Features

Happy Coding :)